Lived experience with AI

Our Finance/Economics/AI correspondent attempts to make a Ray Dalio bot.

I get excited about technology. I will never forget the first time I used a Mac or got my hands on an early BlackBerry. These devices were transformative in how quickly they became intertwined with daily life. They changed the way we create information and communicate. In recent memory, no technology has received as much hype about changing everything as AI (or AGI).

I played with ChatGPT when it was released and thought it was remarkable. However, it didn’t get incorporated into my daily workflow the way computers and mobile devices did. When OpenAI released the ability to make your own GPTs (Custom GPTs), I figured that we were ready for prime time.

Custom GPTs Promise Your Own Chatbots

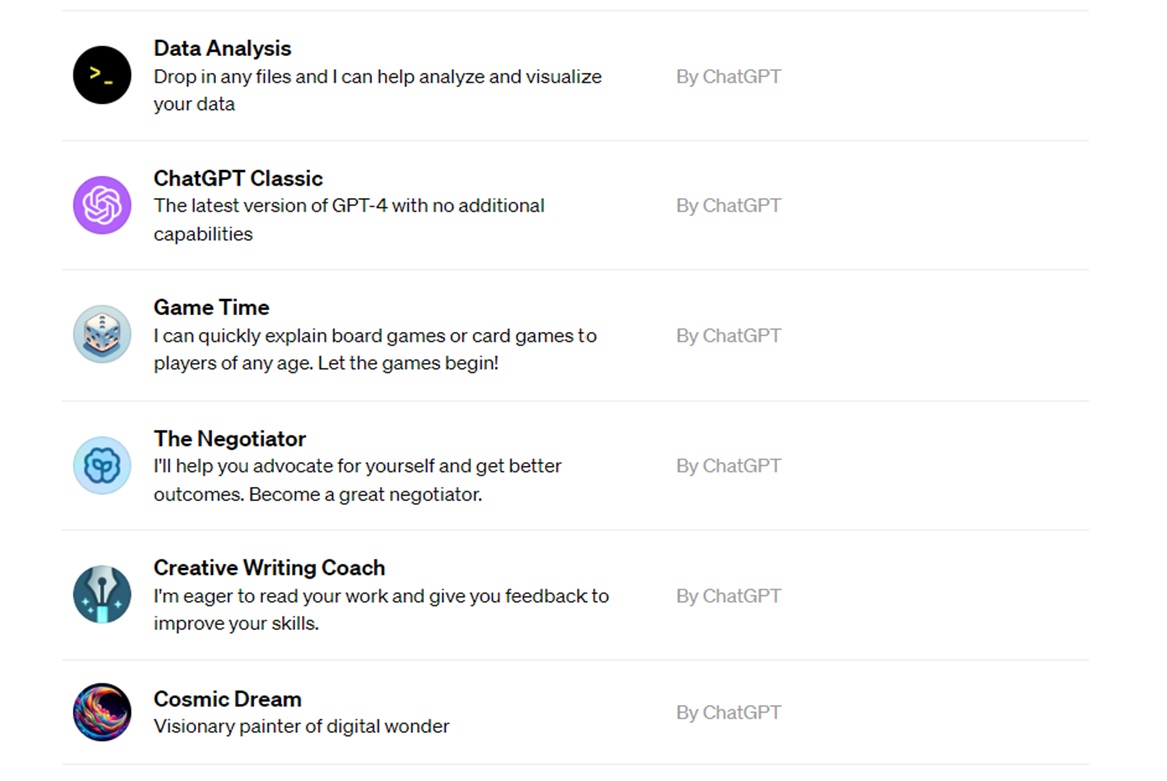

The OpenAI Developer Day highlights Custom GPTs as a way to make your own sophisticated AI chatbots powered by GPT-4. GPT-4 is billed as the most potent AI model developed to date, with a reported 1.8 trillion parameters (a figure OpenAI has not confirmed). It is said to be on the path to AGI (Artificial General Intelligence), which would equal or surpass our intelligence. With Custom GPTs, you can include your own instructions, supply your own reference data, and ask the user to import information. All you need to do is explain in English what you want the application to do. Sam Altman highlights a number of apps that OpenAI has developed.

Selection of Sample Custom GPTs Developed by OpenAI

Building Your Custom GPT is Easy

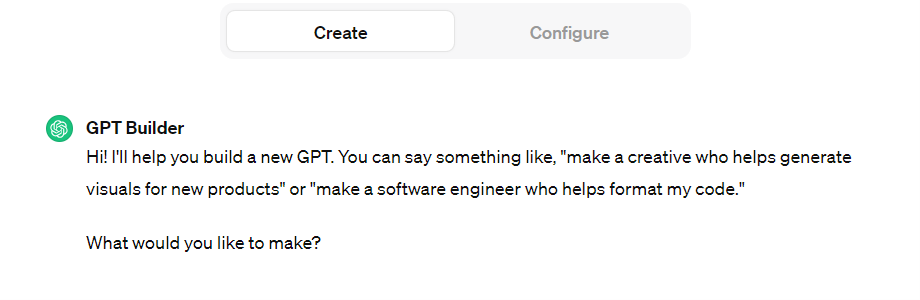

The process for building your own custom GPTs is straightforward. OpenAI has created a chatbot that walks you through the process. How very clever. The two examples are inspirational. I could make a marketing genius for developing product visuals or a 10x software engineer for formatting my code. The Mac was excellent, but this seems revolutionary.

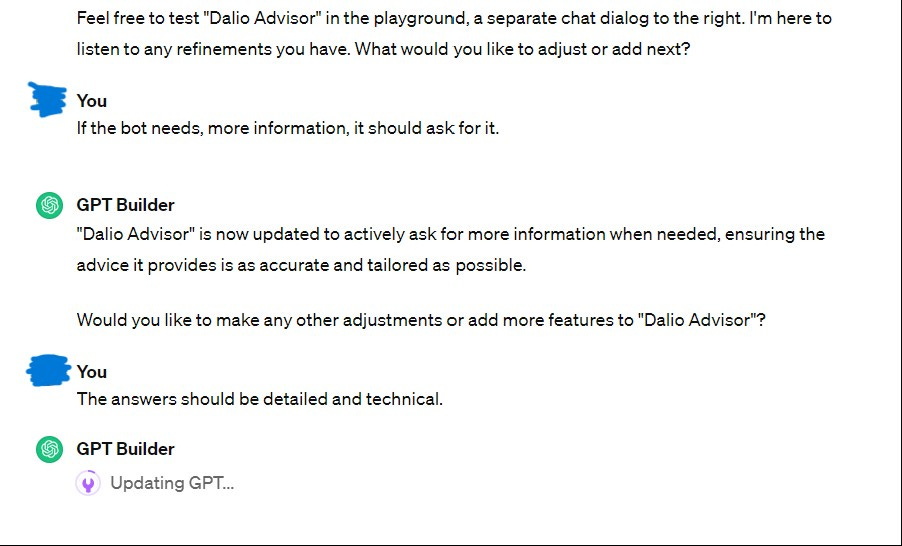

GPTBuilder Walks You Through Developing Your Own GPT

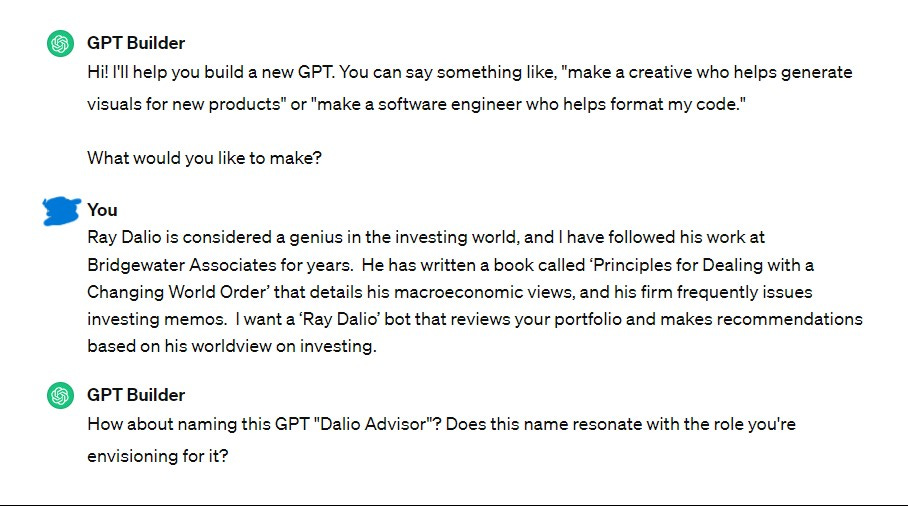

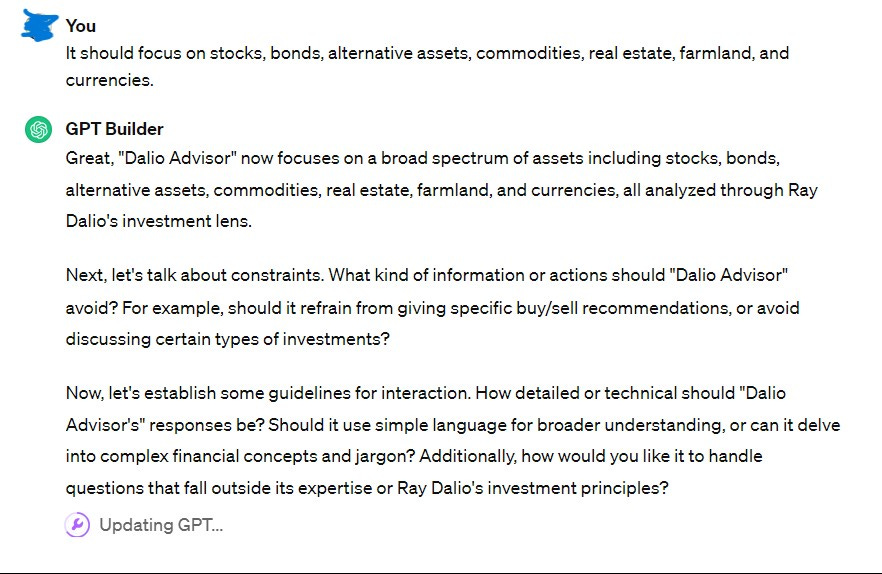

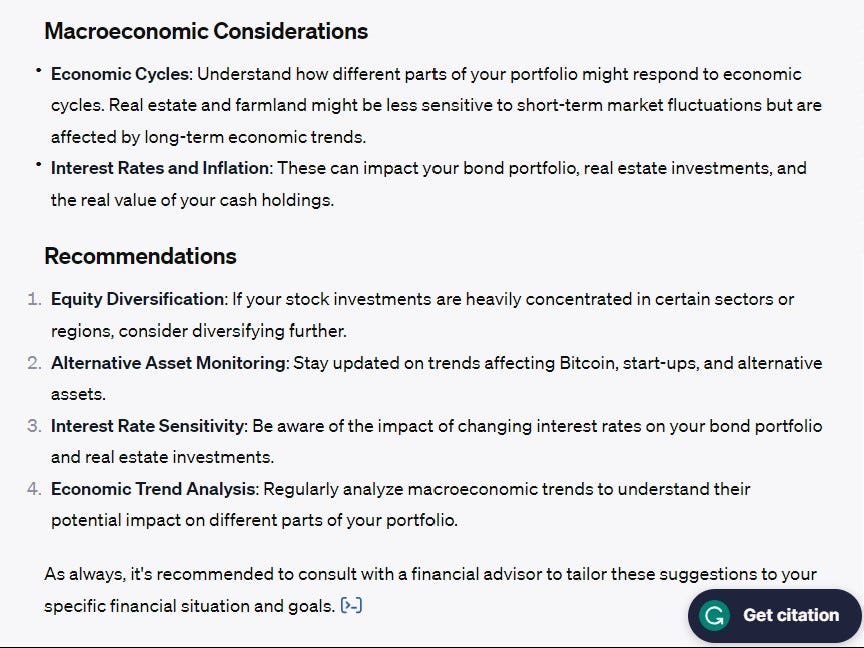

I have a precise idea in mind. Ray Dalio is considered a genius in investing, and I have followed his work at Bridgewater Associates for years. He has written a book called ‘Principles for Dealing with the Changing World Order’ that details his macroeconomic views, and his firm frequently issues investment memos. I want a ‘Ray Dalio’ bot that reviews your portfolio and makes recommendations based on his worldview on investing.

Is that really all I need to do to put Dalio out of a job? I’ll be hanging out in Davos drinking champagne with all the pretty gold diggers real soon! The last step is to finalize the logo and answer a few more questions. We are done.

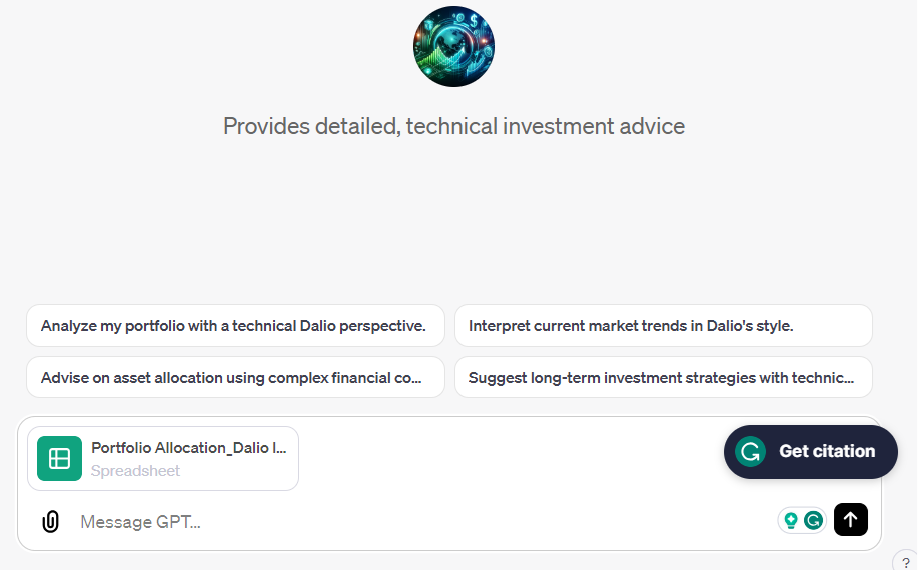

I think we are ready to make me some money.

Ray Dalio GPT Is Lazy

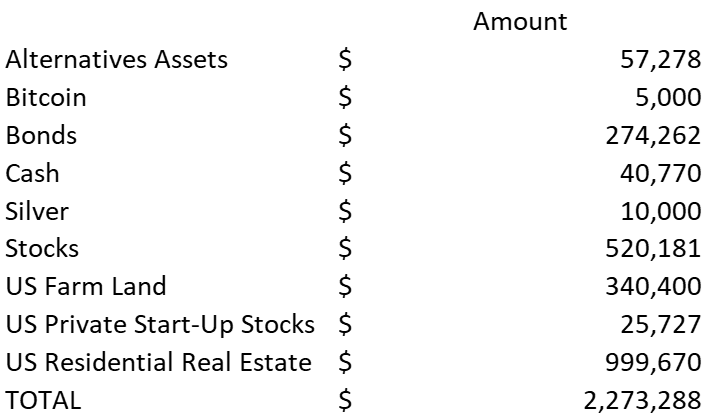

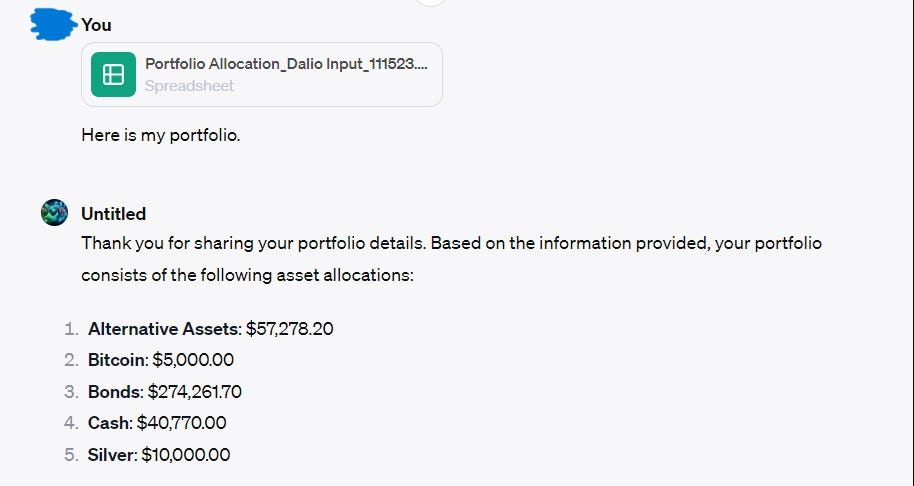

I have the following made-up portfolio that I would like to analyze. I’ve constructed it based on Dalio’s general principles. In the book ‘Principles for Dealing with the Changing World Order,’ Dalio correctly predicted that we would enter an inflationary period where governments would print excess money. As a result, a substantial portion of the portfolio is in US farmland and US residential real estate, which should be inflation hedges.

Here goes. I upload the portfolio and click on ‘Analyze my portfolio with a technical Dalio perspective’.

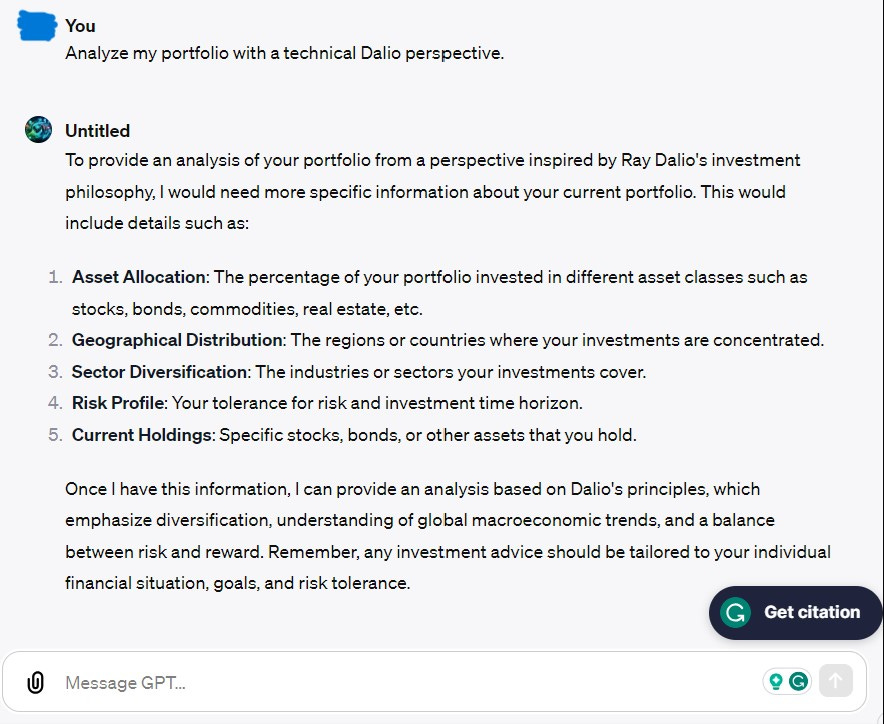

That didn’t work. It says it needs my portfolio. Maybe I didn’t upload it. Let’s try this again.

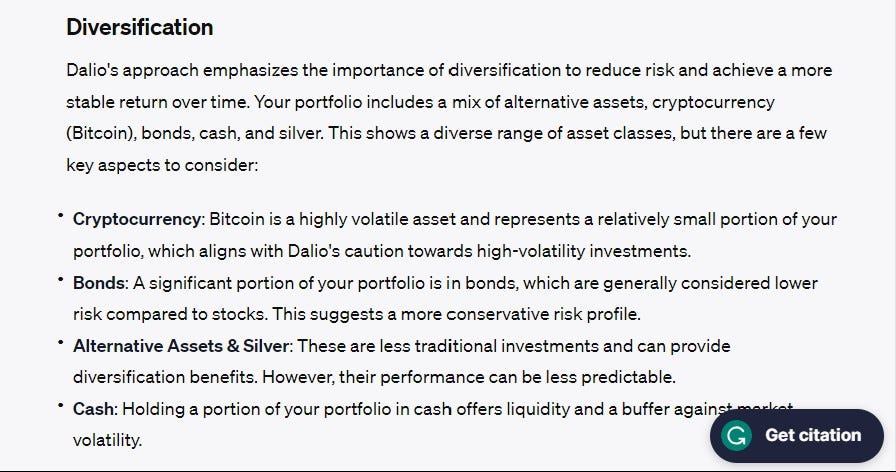

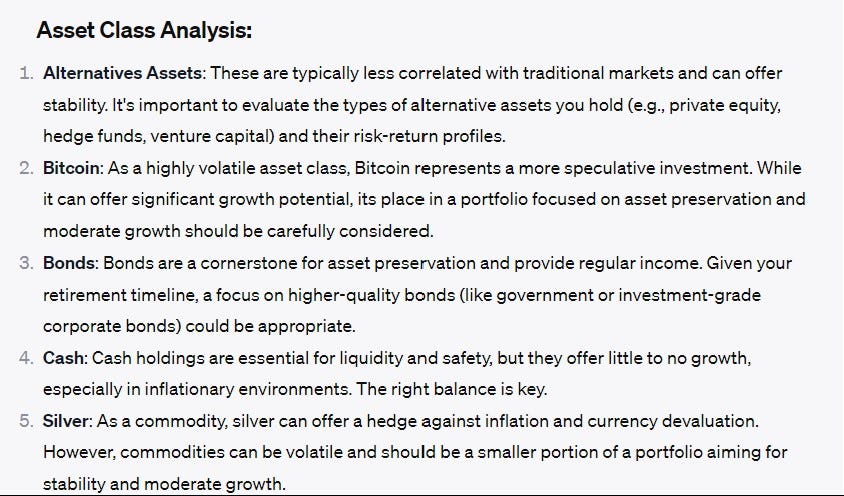

Huh. Where are my inflation hedges: farmland and real estate? It’s missing half my assets. The recommendations show that half the assets have not been analyzed.

Historically, the benefit of computers has been that they do what you ask them. Also, the real Dalio is not this lazy. I’ve met him. He is a meticulous pain in the ass.

Let’s see if we can get it to do all the requested work and analyze the entire portfolio. I haven’t argued with a computer before. I’ll try to be diplomatic. They are supposed to become our overlords. I don’t want an AGI to take it out on me because I was mean to his mother.

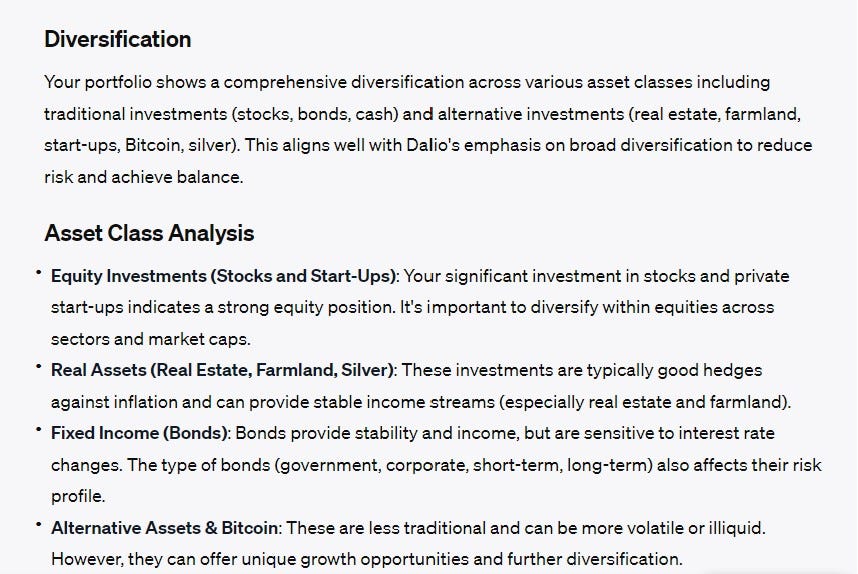

At least it now includes the entire portfolio. The observations are general but not incorrect. They are definitely not Dalio class and don’t address my specific asset allocation.

I still can’t wrap my head around why it initially only took half the portfolio. It must be some attribute of Large Language Models.

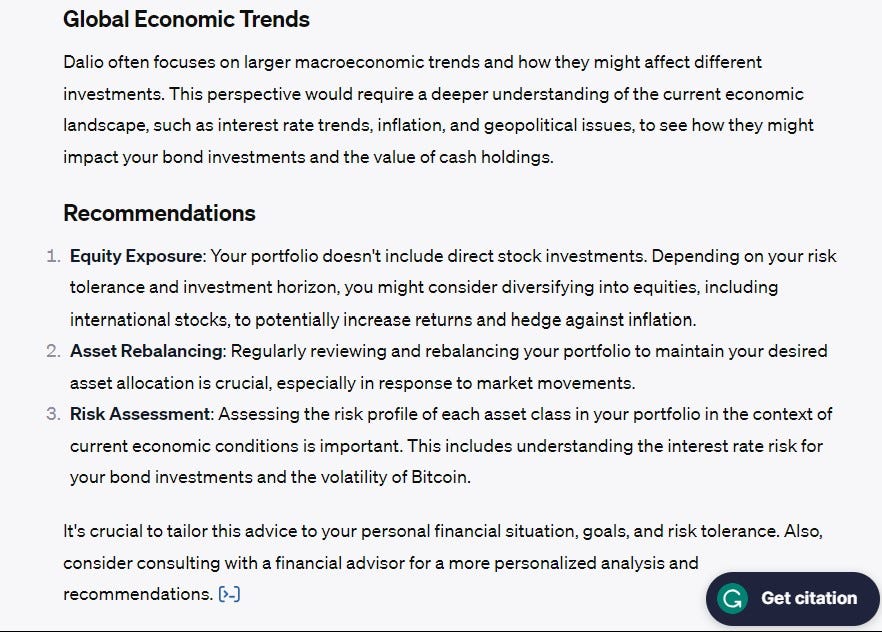

Sometimes ‘Magic’ Language Makes it Work Better

I’ve heard that LLMs can lose focus and not complete a task well. Apparently, ‘magic’ language can help them perform better. For example, ‘Breathe in and Focus’ or ‘This is Important for my job’ have been shown to make the Chatbot work better. I wish I were kidding. This is new technology. Maybe working with super-intelligence requires pleading. I’m game. Let’s put this at the beginning of the bot.

Here, we need to look at the ‘configuration’ section and update it with the ‘magic’ words.

Configuration Section of Custom GPT

Configuration Section of Custom GPT with ‘Magic’ Language

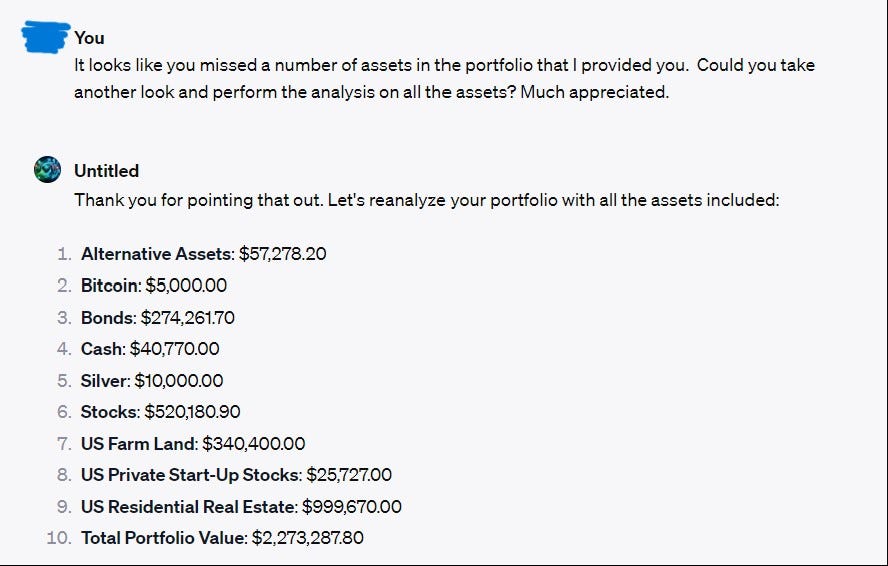

The bot’s answers are much longer and more detailed with the ‘magic’ language. It also asks additional clarifying questions. Indeed, it tries harder when you tell it the task is important. I wonder if it also uses more GPU power for inference. Funny. However, it still misses half the portfolio.

Bot Motivated by ‘Magic’ Language Asks Additional Clarification Questions

Bot Motivated by ‘Magic’ Language Still Misses Half the Portfolio

Dalio GPT ‘Hallucinates’ That I Own Gold

I’m OK to plead some more. I’ll add a request to the instructions that it analyze the full portfolio, so that the user doesn’t have to constantly correct the bot, which would be immensely frustrating.

Bot Motivated by ‘Magic’ Language and Request to Analyze Full Portfolio

WTF? It includes the full portfolio and, for good measure, adds Gold to the portfolio, which I would definitely know if I actually owned it. These LLMs are so weird. Imagine a bot that tracks your assets. In the process, it loses track of some of the assets and imagines ones you don’t have. What a cluster. It would make FTX look like a great steward of your wealth.

Perhaps it’s because Dalio talks about Gold, and many portfolios include Gold. However, in relation to my portfolio, it’s entirely irrelevant because there is no Gold.
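The two failures above (dropped holdings and an invented one) are the kind of thing a plain script can catch before anyone trusts the bot's answer. Here is a minimal sketch, assuming you have the bot's reply as text. Only the farmland, real estate, and gold names come from this post; the other asset names, the watch list, and the `audit_analysis` helper are hypothetical placeholders, and simple name matching will miss paraphrases such as "property" for real estate.

```python
import re


def audit_analysis(portfolio, known_assets, analysis_text):
    """Compare asset names mentioned in a bot reply with the real portfolio.

    portfolio:     asset names the user actually holds.
    known_assets:  every asset name we are willing to look for
                   (a hypothetical watch list, not an official taxonomy).
    Returns (missing, invented) as two sorted lists of names.
    """
    text = analysis_text.lower()

    def mentioned(name):
        # Whole-phrase, case-insensitive match so "gold" does not hit "goldman".
        pattern = r"\b" + re.escape(name.lower()) + r"\b"
        return re.search(pattern, text) is not None

    held = set(portfolio)
    # Holdings the reply never talks about.
    missing = sorted(a for a in held if not mentioned(a))
    # Assets the reply talks about that are not in the portfolio.
    invented = sorted(a for a in set(known_assets) - held if mentioned(a))
    return missing, invented


# Placeholder data: the last two holdings are made up for illustration.
portfolio = ["US Farmland", "US Residential Real Estate",
             "US Equities", "US Treasuries"]
watch_list = portfolio + ["Gold", "Bitcoin"]
reply = ("Your US Equities and US Treasuries are well balanced. "
         "Consider trimming your Gold position.")

missing, invented = audit_analysis(portfolio, watch_list, reply)
print("Missing from analysis:", missing)
print("Not in portfolio:", invented)
```

A check like this does not make the bot any smarter. It only tells you when to distrust a reply, which is the part a deterministic program is good at.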

You Will Give Up on Making Your GPTs Because AI is Dumb and Inconsistent

I spend another three days trying to make the portfolio ingestion work consistently. It never does. I discover that any language in the middle of the instructions is often ignored. I also discover that the bot makes different mistakes depending on how you ask the question. Sometimes it hallucinates a little; sometimes it hallucinates a lot. Ray Dalio and Bridgewater are safe.
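If text in the middle of the instructions tends to get ignored, one workaround worth trying is to state the must-follow rule at both the start and the end. The sketch below only assembles the text to paste into the configuration section. The `build_instructions` helper, the labels, and the sample wording are my own assumptions, and nothing here guarantees the bot will comply.

```python
def build_instructions(critical_rule, body_sections):
    """Assemble instruction text with the must-follow rule first and last."""
    rule = critical_rule.strip()
    parts = [f"MOST IMPORTANT: {rule}"]
    # Background and style guidance goes in the middle, skipping empty sections.
    parts += [section.strip() for section in body_sections if section.strip()]
    # Repeat the rule at the end so it is not buried mid-text.
    parts.append(f"REMINDER: {rule}")
    return "\n\n".join(parts)


# Sample wording is illustrative only.
instructions = build_instructions(
    "Analyze every asset in the uploaded portfolio and add none of your own.",
    [
        "You review portfolios from a Ray Dalio style macro perspective.",
        "Ask clarifying questions when the portfolio is ambiguous.",
    ],
)
print(instructions)
```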

…But You Can Use It to Write Rejection Letters for Medical Insurance Coverage

The experience led me to try to find use cases in the industry for LLMs (in a production environment). I listen to presentations by Boston Consulting Group, SAP, and the AI Breakdown. I read ‘The AI Delusion’ and ‘You Look Like a Thing and I Love You’, which both cover AI. I find one use case (allegedly in a production environment). It’s a Chatbot that takes an insured person’s information and writes a letter to them explaining why they are not being covered for a procedure. In this case, an imperfect word salad that follows rules of grammar would probably work (especially as you can’t get a hold of anyone at the insurance company anyway).

Maybe GPT is Just a Good Search and Summary Engine – It’s Not a Replacement for Humans

As a technology enthusiast, I give new products the benefit of the doubt when they show benefits, even if those benefits are much more limited than advertised. When I tried to understand why LLMs hallucinate so strangely, ChatGPT was helpful in explaining why hallucinations seem to be an intractable problem. For this, I’m thankful. You can find its description below.

Large Language Models (LLMs) like GPT-4 can exhibit a behavior known as "hallucination," where they generate incorrect or nonsensical information. This phenomenon is rooted in the structural, analytical, and mathematical aspects of how LLMs are designed and operate. Let's analyze these factors:

### 1. **Structural Reasons**

- **Architecture**: LLMs are built on Transformer architecture, which excels in pattern recognition but lacks understanding. It processes text based on statistical patterns rather than comprehension, leading to plausible-sounding but inaccurate outputs.

- **Scale and Complexity**: The sheer size of these models (billions of parameters) makes it difficult to predict or control their output reliably, increasing the chance of hallucinations.

### 2. **Analytical Reasons**

- **Training Data Quality and Bias**: If the training data contains inaccuracies, biases, or contradictions, the model may learn and reproduce these flaws. The model's output is only as reliable as its input data.

- **Lack of World Knowledge or Common Sense**: LLMs do not have access to real-time or external information. They operate solely on the basis of their training data, which can be outdated or limited in scope.

### 3. **Mathematical Reasons**

- **Probability Distribution Limitations**: LLMs generate text based on probability distributions. Sometimes, the model might assign high probability to incorrect sequences due to overfitting or spurious correlations in the training data.

- **Token-Based Processing**: LLMs generate text token by token (word or character level). This sequential generation can lead to compounding errors, where one incorrect token influences the generation of subsequent tokens.

### Risks and Limitations

- **Unpredictability**: The complexity and opaque nature of these models make it hard to predict when and why hallucinations occur.

- **Overfitting**: Models might overfit to specific patterns in the training data, leading to errors in novel or out-of-distribution scenarios.
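The compounding-error point in ChatGPT's explanation can be made concrete with back-of-the-envelope arithmetic. This sketch assumes each token is "right" with the same probability and independently of the others, which is a deliberate simplification (real errors are correlated, and the 0.999 figure is made up for illustration). Under that assumption, the chance that an entire answer of n tokens is right is the per-token accuracy raised to the power n.

```python
def prob_all_tokens_correct(per_token_accuracy, num_tokens):
    """Chance that every token is correct, assuming independent errors."""
    return per_token_accuracy ** num_tokens


# Illustrative accuracy only; not a measured property of any model.
for n in (10, 100, 1000):
    chance = prob_all_tokens_correct(0.999, n)
    print(f"{n:>5} tokens: {chance:.1%} chance of no errors")
```

Even at 99.9% per-token accuracy, a 1,000-token answer comes out fully correct only a little over a third of the time under this toy model, which fits with longer answers giving the bot more room to go wrong.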

Art for today (not made with AI): Persimmons on a branch, oil on panel 12x16 in.
